Load Balancer

We previously introduced how to build a cluster of instances in the Service Cluster topic. In this lecture, we’ll explore how to expose a service to the outside world.

Reverse Proxy Pattern

When running a cluster of service instances, these instances typically reside on different machines with distinct addresses. Moreover, instances can be dynamically added or removed. As a result, it’s impractical for clients to directly communicate with individual service instances.

Reverse Proxy is a pattern that exposes a system through a single entry point, concealing the underlying internal structure. Following this pattern, service instances are placed behind a proxy that forwards traffic to them. This proxy should be a fixed and discoverable endpoint, often achieved through DNS.

Load Balancing

Proxying alone isn’t enough. To efficiently utilize resources, we want to distribute traffic evenly across the service instances.

For example, one instance might be handling 4 requests while another processes only 1, clearly an imbalance.

To solve this, we add load balancing capability to the proxy component, which we then refer to as a Load Balancer. Its job is to distribute traffic evenly across the cluster.

Service Discovery

A Load Balancer needs to be aware of the available service instances behind it. The most common approach is to implement a central Service Discovery system to track all instances.

In this setup, service instances must register themselves with the Load Balancer, which otherwise has no inherent knowledge of their existence.
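As a rough illustration of registration, the sketch below keeps an in-memory table of instances per service. The `ServiceRegistry` class, its method names, and the addresses are hypothetical and not taken from any particular product; a real system would also need persistence, expiry of stale entries, and concurrency control.

```python
class ServiceRegistry:
    """Hypothetical in-memory registry mapping a service name to its instances."""

    def __init__(self):
        self._instances = {}  # service name -> set of instance addresses

    def register(self, service, address):
        # Called by an instance when it starts up.
        self._instances.setdefault(service, set()).add(address)

    def deregister(self, service, address):
        # Called by an instance when it shuts down; unknown entries are ignored.
        self._instances.get(service, set()).discard(address)

    def lookup(self, service):
        # The load balancer asks for the current instances of a service.
        return sorted(self._instances.get(service, set()))


registry = ServiceRegistry()
registry.register("orders", "10.0.0.1:8080")
registry.register("orders", "10.0.0.2:8080")
registry.deregister("orders", "10.0.0.1:8080")
remaining = registry.lookup("orders")
print(remaining)
```

The load balancer only ever sees what `lookup` returns, so an instance that never registers receives no traffic.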

Health Check

To ensure only healthy instances receive traffic, the Load Balancer periodically performs health checks and removes unhealthy ones from the pool.
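The following sketch shows one way such a check loop might be structured. The `probe` callable and the failure threshold are assumptions made for illustration: real load balancers typically probe over the network (for example an HTTP endpoint or a TCP connect) on a timer, and the exact removal policy varies by product.

```python
class HealthCheckedPool:
    """Hypothetical pool that drops instances after repeated failed probes."""

    def __init__(self, instances, probe, unhealthy_threshold=3):
        self._probe = probe                    # callable(instance) -> bool
        self._threshold = unhealthy_threshold  # assumed policy, not from the text
        self._failures = {name: 0 for name in instances}

    def run_checks(self):
        # One round of checks; a scheduler would call this periodically.
        for name in self._failures:
            if self._probe(name):
                self._failures[name] = 0       # a success resets the counter
            else:
                self._failures[name] += 1

    def healthy(self):
        # Only instances below the failure threshold may receive traffic.
        return [n for n, f in self._failures.items() if f < self._threshold]


def fake_probe(name):
    # Stand-in for a real network probe: instance-2 always fails.
    return name != "instance-2"


pool = HealthCheckedPool(
    ["instance-1", "instance-2", "instance-3"], fake_probe, unhealthy_threshold=2
)
pool.run_checks()
after_one_round = pool.healthy()
pool.run_checks()
after_two_rounds = pool.healthy()
print(after_one_round, after_two_rounds)
```

Requiring several consecutive failures before removal is a common way to avoid evicting an instance because of a single transient error.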

Load Balancing Algorithms

Several algorithms can be used to select a service instance from a cluster.

Round-robin

The Round-robin algorithm is the most common and often the default option in many load balancing solutions. It cycles through the list of instances in order, assigning each new request to the next instance in sequence.

This method works well for short-lived, similarly sized requests, such as HTTP requests.

However, if the workload varies significantly, problems can arise.

For example, if Instance 2 is already overwhelmed with ongoing requests, the load balancer will still continue to send it new requests in turn, while other instances may be underutilized.
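A minimal sketch of the rotation, assuming a fixed list of instance names (the class and names are illustrative only):

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out instances in a fixed rotation, ignoring their current load."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self._rotation = cycle(list(instances))

    def pick(self):
        # Each call returns the next instance in sequence, wrapping around.
        return next(self._rotation)


balancer = RoundRobinBalancer(["instance-1", "instance-2", "instance-3"])
picks = [balancer.pick() for _ in range(5)]
print(picks)
# ['instance-1', 'instance-2', 'instance-3', 'instance-1', 'instance-2']
```

Note that `pick` never looks at how busy an instance is, which is exactly why an overloaded instance keeps receiving its share of new requests.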

Least Connections

The Least Connections algorithm selects the instance currently handling the fewest active connections. This requires the load balancer to track the number of in-flight requests on each instance.
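The bookkeeping this requires can be sketched as follows. The class and method names are hypothetical; the sketch assumes the load balancer is told when a request starts (`acquire`) and when it finishes (`release`).

```python
class LeastConnectionsBalancer:
    """Tracks in-flight requests per instance and picks the least busy one."""

    def __init__(self, instances):
        self._active = {name: 0 for name in instances}

    def acquire(self):
        # Choose the instance with the fewest in-flight requests.
        # On a tie, the instance listed first wins.
        name = min(self._active, key=self._active.get)
        self._active[name] += 1
        return name

    def release(self, name):
        # Must be called when the request or connection ends.
        self._active[name] -= 1


lb = LeastConnectionsBalancer(["instance-1", "instance-2"])
first = lb.acquire()    # both idle, so instance-1 is chosen
second = lb.acquire()   # instance-1 has 1 in flight, so instance-2 is chosen
lb.release(second)      # instance-2 finishes its request
third = lb.acquire()    # instance-2 is idle again and is chosen
print(first, second, third)
```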

Is this better than Round-robin?

Not necessarily, because the number of active connections doesn’t always reflect the actual resource consumption.

For example, 10 requests on Instance 1 might use just 1 MB of memory, while 3 requests on Instance 2 could consume 100 MB.

This strategy shines for long-lived sessions (like WebSocket), where client sessions persist on the same server for extended periods. In such cases, Round-robin can easily lead to imbalance, making Least Connections a better choice.

Session Stickiness

Load balancing algorithms typically decide which server should handle each request. However, this can be overridden with Session Stickiness.

When a client first connects, the load balancer assigns a stickiness key and returns it in the response:

- The client stores this key locally.

- For subsequent requests, the client includes the key, ensuring it connects to the same instance.

Why is this necessary? For stateful applications like multiplayer games or chat services, clients often need to consistently interact with the same server instance. For example, a client may need to rejoin the same session after a temporary disconnection.

However, this comes at a cost. Session stickiness can easily lead to uneven load distribution, as it bypasses the load balancer’s configured algorithm in favor of sticking with a specific instance.
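The stickiness flow described in this section might look like the sketch below. It assumes the key is a random token and that the load balancer keeps the key-to-instance mapping in memory; in practice the key often travels in a cookie, and other designs encode the instance in the key itself. All names are hypothetical.

```python
import secrets
from itertools import cycle


class StickyBalancer:
    """Round-robin for new clients, fixed instance for clients with a known key."""

    def __init__(self, instances):
        self._rotation = cycle(list(instances))
        self._sessions = {}  # stickiness key -> instance

    def route(self, sticky_key=None):
        # A known key bypasses the balancing algorithm entirely.
        if sticky_key in self._sessions:
            return sticky_key, self._sessions[sticky_key]
        # First contact (or unknown key): pick an instance and issue a new key.
        instance = next(self._rotation)
        new_key = secrets.token_hex(8)
        self._sessions[new_key] = instance
        return new_key, instance


lb = StickyBalancer(["instance-1", "instance-2"])
key, first_instance = lb.route()       # new client, gets instance-1 and a key
_, other_instance = lb.route()         # another new client, gets instance-2
_, repeat_instance = lb.route(key)     # returning client, pinned to instance-1
print(first_instance, other_instance, repeat_instance)
```

Because returning clients skip the rotation, a few heavy sessions pinned to one instance are enough to skew the load.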

Load Balancer Types

There are two common types of Load Balancer: Layer 4 Load Balancer and Layer 7 Load Balancer. The names indicate the network layer at which load balancing occurs.

OSI Review

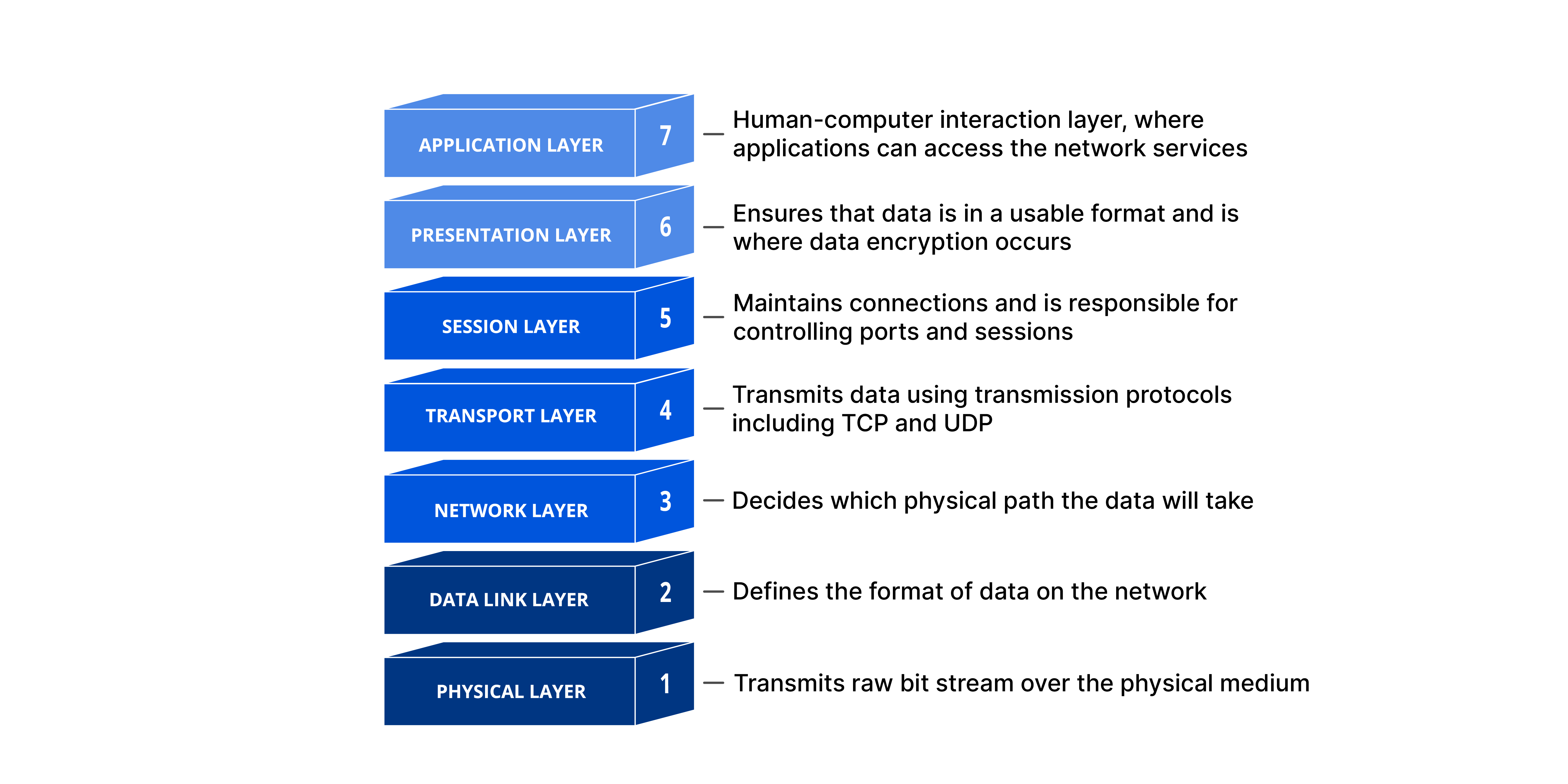

Briefly, a network message’s journey through a machine can be explained via 7 layers in the OSI model.

This layered design helps separate concerns: each layer has distinct responsibilities, operates independently, and can evolve autonomously. In this topic, we’ll focus solely on the Application, Transport, and Network layers.

Encapsulation

When a process sends a message to another machine, the message is progressively encapsulated, transforming from application data into a network packet:

- Application Layer (L7): the application formats the message using its specific protocol (e.g., HTTP).

- Transport Layer (L4): the machine attaches the source and destination port numbers to the message.

- Network Layer (L3): the machine adds the source and destination addresses to the message.

As the message moves down, it’s enriched with networking information at each layer. Notably, lower layers cannot interpret or modify the data encapsulated by higher layers.

Decapsulation

On the recipient side, the message undergoes decapsulation, moving upward through the layers:

- Network Layer (L3): reads and strips off the address.

- Transport Layer (L4): reads the port number and routes to the correct application.

- Application Layer (L7): interprets and processes the protocol-specific message.
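The two journeys above can be modeled with nested wrappers. This is a heavily simplified sketch: the `Segment` and `Packet` types are hypothetical, only destination fields are modeled, and real headers carry far more information.

```python
from dataclasses import dataclass


@dataclass
class Segment:          # Transport Layer (L4) wrapper
    dst_port: int
    payload: bytes      # opaque bytes produced by the Application Layer (L7)


@dataclass
class Packet:           # Network Layer (L3) wrapper
    dst_ip: str
    segment: Segment


def encapsulate(http_message: bytes, dst_ip: str, dst_port: int) -> Packet:
    # Moving down: L4 adds the port, then L3 adds the address.
    segment = Segment(dst_port=dst_port, payload=http_message)
    return Packet(dst_ip=dst_ip, segment=segment)


def decapsulate(packet: Packet, local_ip: str, listeners: dict) -> str:
    # L3: check the address and strip it off.
    if packet.dst_ip != local_ip:
        raise ValueError("packet is not addressed to this machine")
    # L4: use the port to find the receiving application.
    handler = listeners[packet.segment.dst_port]
    # L7: only the application interprets the payload.
    return handler(packet.segment.payload)


def http_request_line(raw: bytes) -> str:
    # Toy application: return the first line of the HTTP request.
    return raw.decode().split("\r\n")[0]


packet = encapsulate(b"GET /a HTTP/1.1\r\nHost: example.test\r\n\r\n", "10.0.0.5", 8080)
result = decapsulate(packet, "10.0.0.5", {8080: http_request_line})
print(result)  # GET /a HTTP/1.1
```

Neither `Segment` nor `Packet` ever parses `payload`, mirroring how lower layers treat higher-layer data as opaque.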

Layer 7 Load Balancer

A Layer 7 Load Balancer operates at the Application Layer (L7) of the OSI model, handling protocols like HTTP or WebSocket.

This high-level position allows it to inspect application-specific details, like HTTP headers, parameters, and message bodies, letting it make intelligent routing decisions. Technically, two separate connections are established:

- Between the client and the load balancer.

- Between the load balancer and the service.

API Gateway Pattern

API Gateway is a design pattern providing a single entry point for all external clients. It acts as a proxy in front of the load balancers.

Operating multiple load balancers increases management complexity. A commonly preferred solution combines the gateway and load balancer, sharing infrastructure and using routing rules to direct traffic based on criteria like domain, HTTP path, headers, or query parameters.

For example:

- Requests to /a are routed to Service A.

- Requests to /b are routed to Service B.
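Path-based rules like these can be sketched as a small prefix table. The `ROUTES` table and the `route` function are illustrative assumptions; real gateways offer richer matching (hosts, headers, query parameters, regular expressions).

```python
# Hypothetical routing table: path prefix -> target service.
ROUTES = [
    ("/a", "Service A"),
    ("/b", "Service B"),
]


def route(path):
    """Return the service for a request path, or None if no rule matches."""
    for prefix, service in ROUTES:
        # Match the prefix itself or anything below it, but not "/abc" for "/a".
        if path == prefix or path.startswith(prefix + "/"):
            return service
    return None


print(route("/a/users"))  # Service A
print(route("/b"))        # Service B
print(route("/abc"))      # None
```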

SSL Termination

A major challenge with a Layer 7 Load Balancer is handling traffic encrypted via SSL/TLS. Since a Layer 7 Load Balancer needs to read application-level data to make decisions, it cannot work with traffic it is unable to decrypt.

In other words, we can’t use a Layer 7 Load Balancer and still guarantee complete end-to-end encryption. To make it work, the SSL/TLS decryption must be shifted to the Load Balancer itself. This process is known as SSL Termination.

New connections are then established internally to forward plaintext traffic to services.

Security Concern

This introduces a security risk: decrypted data resides at the load balancer, potentially exposing sensitive information.

In some compliance and data governance contexts, data must remain encrypted all the way to its destination service. Additionally, using an external load balancer for SSL Termination can lead to data leakage outside your trusted environment.

Layer 4 Load Balancer

A Layer 4 Load Balancer operates at the Transport Layer (L4) of the OSI model. It cannot inspect application-level content; routing decisions are based solely on the destination address and port.

Essentially, a Layer 4 Load Balancer acts like a network router between clients and services. Once a client connects to a server, it keeps communicating with the same instance as long as the connection stays open.

This connection-level stickiness is necessary because of packet segmentation, where large messages are split into multiple network packets (aka TCP segments).

For example, an HTTP request is split into two network segments. A Layer 7 Load Balancer can understand protocols like HTTP and reassemble segmented requests before forwarding them.

Conversely, a Layer 4 Load Balancer is unaware of application protocols and may accidentally distribute segments of the same request to different servers, leading to errors.

The solution is to forward all segments of a connection to the same server until it disconnects.
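One way to picture this is a connection table keyed by the client's address and port. The sketch below is an assumption-laden illustration rather than how any specific product works: real Layer 4 load balancers usually key on the full connection tuple and may use hashing or NAT tables instead. All names are hypothetical.

```python
from itertools import cycle


class ConnectionTable:
    """Pins every segment of a connection to the server chosen for its first segment."""

    def __init__(self, backends):
        self._rotation = cycle(list(backends))
        self._table = {}  # (client ip, client port) -> backend

    def forward(self, src_ip, src_port):
        conn = (src_ip, src_port)
        if conn not in self._table:
            # First segment of a new connection: pick a backend once.
            self._table[conn] = next(self._rotation)
        # Every later segment of the same connection reuses that choice.
        return self._table[conn]

    def close(self, src_ip, src_port):
        # Connection ended: forget the mapping.
        self._table.pop((src_ip, src_port), None)


lb = ConnectionTable(["server-1", "server-2", "server-3"])
seg1 = lb.forward("203.0.113.7", 50000)    # new connection, server-1
seg2 = lb.forward("203.0.113.7", 50000)    # same connection, still server-1
other = lb.forward("203.0.113.7", 50001)   # different connection, server-2
lb.close("203.0.113.7", 50000)
reopened = lb.forward("203.0.113.7", 50000)  # treated as new, server-3
print(seg1, seg2, other, reopened)
```

The balancing decision happens once per connection, not once per request, which is the root of the imbalance discussed at the end of this section.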

Why choose a Layer 4 Load Balancer over a Layer 7 Load Balancer?

- It avoids SSL Termination, which can be a security risk.

- It delivers significantly better performance, since it simply forwards packets without interpreting them.

However, due to the sticky connection behavior, a Layer 4 Load Balancer can easily become unbalanced: one server might receive a disproportionate load while others stay underutilized. Still, it’s a solid choice for stateful, high-performance services like multiplayer gaming backends.